Google is launching a fashion-related generative AI that aims to make virtual clothing try-ons more realistic. The company compares it to Cher’s closet preview tech in the movie “Clueless.”

This new tool will first be available in the US for brands like Anthropologie, LOFT, H&M and Everlane. Products that support the feature will be labeled with a “Try On” button. Google says it intends to extend the feature to more brands in the future.

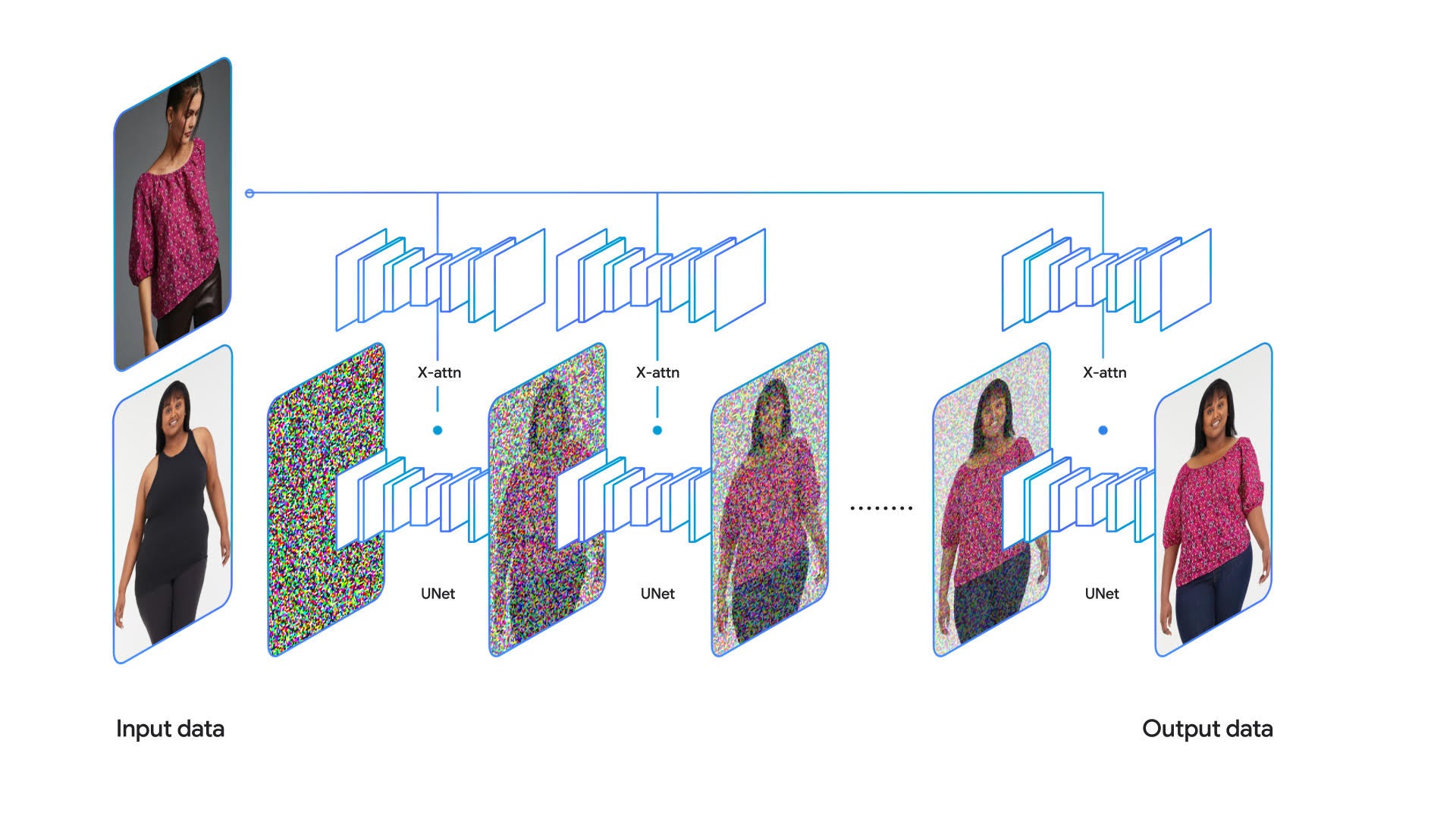

The tool doesn’t show how the clothes would look on you personally. Instead, it lets you pick a model who you think represents you physically. Building a tool that can mimic how real-life clothes drape, fold, cling, stretch and wrinkle starts with photographs of a range of real models with different body shapes and sizes. That way, shoppers can pick a model with a certain skin tone or body type and see how the outfit looks on that model. The centerpiece of the system is a generative diffusion technique that combines the properties of a garment in one image with a person in another image.
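
For readers curious what "diffusion" means mechanically: in standard DDPM-style diffusion models, the "noise" is usually Gaussian noise mixed into the existing pixel values according to a schedule, and a network is trained to predict that noise so it can be removed step by step. The sketch below is a minimal illustration under that assumption, not Google's implementation. The linear schedule (`beta_start`, `beta_end`, 1,000 steps) and every helper name are hypothetical choices made for the example. It noises a toy four-pixel "image" and then inverts the process using the exact noise, which is the quantity a trained denoiser tries to estimate.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (a common DDPM-style choice; assumed here)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the share of the original signal kept."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(pixels, eps)]
    return noisy, eps


def recover_original(noisy, predicted_eps, t, alpha_bars):
    """Invert add_noise given a noise estimate (in a real model, a trained network supplies it)."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(noisy, predicted_eps)]


# Toy "image": four pixel values scaled to [-1, 1] (hypothetical data).
image = [0.5, -0.2, 0.9, 0.1]
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)

slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)      # still close to the image
pure_noise, eps = add_noise(image, 999, alpha_bars, rng)       # essentially unrecognizable
restored = recover_original(pure_noise, eps, 999, alpha_bars)  # exact only because eps is known
```

In practice the denoiser only approximates the noise, so generation runs many small denoising steps instead of the single exact inversion shown here.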

“Diffusion is the process of gradually adding extra pixels (or ‘noise’) to an image until it becomes unrecognizable — and then removing the noise completely until the original image is reconstructed in perfect quality,” Ira Kemelmacher-Shlizerman, senior staff research scientist at Google Shopping, explained in a blog post. “Instead of using text as input during diffusion, we use a pair of images…Each image is sent to its own neural network (a U-net) and shares information with each other in a process called ‘cross-attention’ to generate the output: a photorealistic image of the person wearing the garment.”
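
Cross-attention is a standard transformer operation in which one set of features "queries" another. The sketch below shows a generic single-head, scaled dot-product version in plain Python. It assumes, for illustration only, that feature vectors from the person image act as queries while features from the garment image supply keys and values; it does not reproduce Google's actual architecture. The token values and the identity projection matrices standing in for `w_q`, `w_k` and `w_v` are hypothetical placeholders for weights a real network would learn, and a production U-Net would run many attention heads over much larger feature maps.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def project(tokens, weight):
    """Multiply each token (a feature vector) by a weight matrix given as a list of rows."""
    return [[sum(x * w for x, w in zip(tok, col)) for col in zip(*weight)] for tok in tokens]


def cross_attention(person_tokens, garment_tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention: person features query garment features."""
    queries = project(person_tokens, w_q)
    keys = project(garment_tokens, w_k)
    values = project(garment_tokens, w_v)
    scale = math.sqrt(len(keys[0]))
    weights = [
        softmax([sum(q * k for q, k in zip(query, key)) / scale for key in keys])
        for query in queries
    ]
    # Each output token is a weighted blend of the garment value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Hypothetical 2-D features: two person regions and three garment regions.
identity = [[1.0, 0.0], [0.0, 1.0]]
person = [[1.0, 0.0], [0.0, 1.0]]
garment = [[5.0, 0.0], [0.0, 5.0], [0.0, 0.0]]
outputs, weights = cross_attention(person, garment, identity, identity, identity)
```

Each person token ends up weighted mostly toward the garment token it matches best, so garment detail flows into the person's layout. That is the intuition behind letting the two networks share information.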

Once built, the tool was trained on Google’s Shopping Graph, which houses some 35 billion products from retailers across the web. Researchers presented a paper describing the technique at the IEEE Conference on Computer Vision and Pattern Recognition this year. In the paper, they also showed how their images compared with those produced by existing techniques, such as geometric warping, and by other virtual try-on tools.

Of course, while the tool offers a good visualization of how clothes look on a model who resembles the shopper or is otherwise a useful reference, it doesn’t guarantee a good fit, and for now it works only with upper-body clothing. For pants and skirts, users will have to wait for the next iteration.

Google is also kicking off the summer with a collection of other new AI features across its platforms, including Maps, Lens, Labs and more.

The post Google’s new AI will show how clothes look on different body types appeared first on Popular Science.
